Third-party components in frameworks like GitHub Actions pose risks due to privileged access and limited oversight

Practical Tips for Supply Chain Security in CI/CD: Mitigating Risks from Third-Party GitHub Actions

GitHub is more than a code-hosting platform—it’s a robust CI/CD system that streamlines testing, building, and deploying applications.

Third-party “actions” enhance workflow automation, but they can pose security risks. For example, the popular third-party action “tj-actions/changed-files” was recently compromised, potentially exposing sensitive data.

In this blog post, we’ll provide hands-on tips to help you reduce such risks.

The importance of GitHub Actions security

Developers automate tasks in GitHub by writing workflow files that are triggered by events, such as a new pull request or a manually triggered release. These workflows are often highly privileged, making GitHub Actions security an important topic for anyone using such automations.

A workflow could look something like this:

```yaml
name: build-and-deploy

on:
  push:
    branches:
      - main

jobs:
  build-and-deploy:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
      # ... more build and test steps
      - name: deploy
        uses: azure/some-deploy-action@v2
        with:
          deploy-secret: ${{ secrets.DEPLOY_SECRET }}

  verify-deploy:
    # ...
```

Workflow files are organized into jobs, and each job has multiple steps. Steps typically take the form of actions, which are reusable components that perform common tasks. Many of the most popular actions come from GitHub, but many come from third parties.

In the example above, we use the actions “actions/checkout@v4” and “actions/setup-node@v4”. They come from the GitHub-managed “actions” organization, but the made-up Azure deploy action comes from another organization. You could likewise use actions from any repository on GitHub. Actions published by GitHub and third-party actions run in the same kind of privileged context and often have access to sensitive secrets.

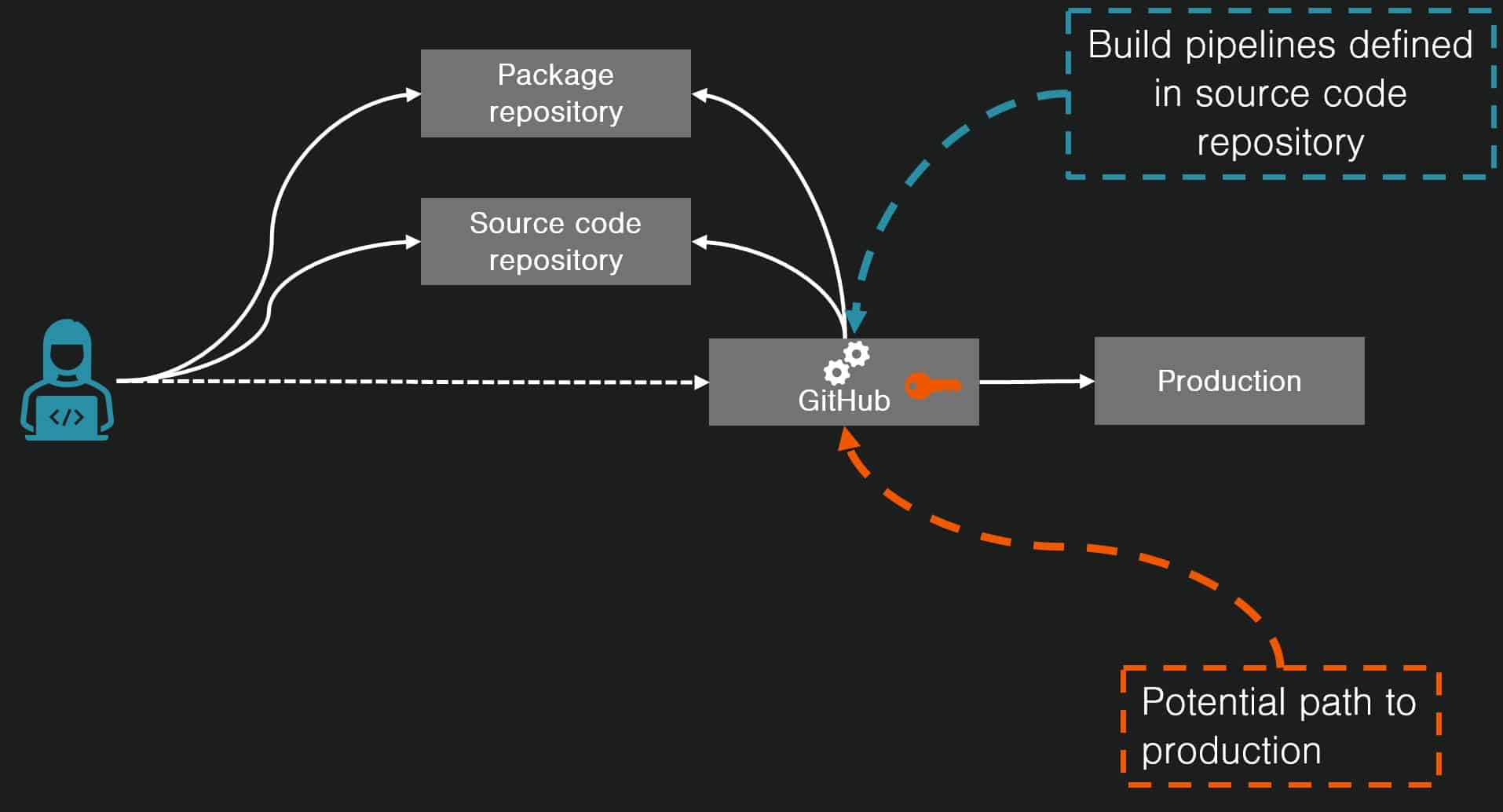

If actions become malicious, they can put the entire production environment and customer data at risk:

- If you are packaging a binary for separate deployment, that binary may become infected.

- If you are deploying to production directly from GitHub, privileged access tokens could leak. Alternatively, the threat actor might be able to deploy other or additional applications from the deploy workflow.

- If you are using Infrastructure-as-Code (IaC), you are likely giving GitHub broad access to your cloud infrastructure. In such cases, malicious code may be able to affect infrastructure beyond the specific app.

The recent attack on tj-actions/changed-files

A threat actor managed to compromise the popular third-party GitHub Action tj-actions/changed-files. Analysis suggests that the malicious code dumped memory from the runner and extracted secrets from workflows that used the action, writing them to the build log. Evidence uncovered later indicates that this was likely a multi-stage attack and that the final target could have been Coinbase. For more details on the attack, see Unit 42’s blog post.

That the secrets were “only” dumped to the workflow log is a welcome risk reduction for many enterprise users, whose workflow logs are not publicly visible. Secrets should still be rotated, but the situation for public repositories, where workflow logs can be read by anyone, is much more problematic. The secondary effects could be worse and can even be hard to trace back to this attack. For example, if release or deployment secrets for open-source projects were leaked, that could lead to further breaches and supply-chain attacks down the line. Hopefully, many projects noticed in time and took appropriate measures.

This is not the first attack against third-party GitHub Actions and will certainly not be the last. While this seems to have been a targeted attack against an innocent publisher, there are other risks to consider as well. One example is typosquatting (naming actions similarly to real ones in the hope that they are used by mistake). Another is malicious publishers (either from the start, or by joining or taking over a project).

What you can do to reduce the risk of becoming a victim of malicious GitHub actions

As usual when modeling risk we consider two main components: probability and impact.

- How can we reduce the probability of one of our dependencies being malicious or vulnerable?

- How can we reduce the impact of such an event, under the very real assumption that such events can happen?

We’ll go into eight mitigations that can improve your resilience and overall GitHub Actions security:

- Audit and reduce use of third-party actions (reducing probability)

- Limit which actions are allowed on the organization level (reducing probability)

- Pin actions to a SHA hash (reducing probability)

- Use separate jobs or workflows for sensitive operations (reducing impact)

- Bind secrets to GitHub Environments and environments to jobs (reducing impact)

- Use temporary tokens for deploys via OpenID Connect (reducing impact)

- Bind OIDC to environments instead of branches (reducing impact)

- Scan workflows with static analysis tools (SAST) to find issues such as unnecessary secret activation (reducing impact)

Using GitHub for CI/CD can be complex, and there is more to consider than what is listed here. But by understanding and applying these measures, you should end up with an above-average security posture against malicious actions. If you want to take it to the next level after this, you could, for example, look into supply-chain assurance using the SLSA project, or contact specialists such as Truesec for further help.

Note: Certain features and protections mentioned here require a paid Enterprise plan (or a public repository). For private repositories on a Team plan, fewer protections are available.

Mitigation 1: Audit and reduce use of third-party actions

Just like with all types of dependencies, the cornerstone of GitHub Actions security is to remove things that are too risky or not needed. The bar for bringing in GitHub Actions should be high, as they often run in quite privileged contexts. You should either benefit substantially from the action or really trust the author. In many cases, you can achieve the same thing with a few lines of code in the workflow. For example, many users of an action that lists changed files could probably make do with a bit of bash and ‘git diff’ instead.
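As a minimal sketch of that idea, the workflow below lists the files changed in a pull request using only `actions/checkout` and `git diff`. It assumes a `pull_request` trigger and that the full history is fetched; the workflow and job names are illustrative and not taken from any real project.

```yaml
name: list-changed-files

on:
  pull_request:

permissions:
  contents: read  # read-only token; this job needs no secrets

jobs:
  changed-files:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
        with:
          fetch-depth: 0  # fetch full history so both base and head commits are available
      - name: list changed files
        env:
          # Pass the SHAs through environment variables instead of
          # interpolating ${{ }} expressions directly into the script
          BASE_SHA: ${{ github.event.pull_request.base.sha }}
          HEAD_SHA: ${{ github.event.pull_request.head.sha }}
        run: git diff --name-only "$BASE_SHA...$HEAD_SHA"
```

The three-dot form compares the pull request head against its merge base with the base branch, which roughly matches what “changed in this pull request” means.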

Mitigation 2: Limit which actions are allowed on the organization level

Use the “Allow specified actions and reusable workflows” feature to limit which actions can be used in your organization.

You can specify the publishers or actions you trust, and even pin them to a SHA hash (though this is rarely practical at the organization level).

Mitigation 3: Pin actions to a SHA hash

```yaml
# Instead of this:
uses: azure/some-deploy-action@v2

# Do this:
uses: azure/some-deploy-action@247835779184621ab13782ac328988703583285a
```

This is an often-recommended mitigation, but it can be difficult to achieve. It might require quite frequent updates to the workflows that reference the actions if you want feature updates and bug fixes.

If it’s a popular action, pinning might also lead to inconsistencies or drift in the organization, where different workflows use different versions. Pinning also comes with a caveat: even if we pin to a hash, the action might not be immutable, because it might load code dynamically at runtime (so the pin can give a slightly false sense of security). Still, it’s better than referencing a tag, since a tag can be moved to point to different code (benign one day and malicious the next).

You can use tooling to automatically create PRs for updates, but if those are merged without review, you are close to the same risk situation as if you just pointed to a tag.
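One such tool is GitHub’s Dependabot, which can open pull requests that update the actions referenced in your workflows, including SHA-pinned ones. A minimal `.github/dependabot.yml` might look like this; the weekly interval is an arbitrary choice for illustration:

```yaml
# .github/dependabot.yml
version: 2
updates:
  - package-ecosystem: "github-actions"
    directory: "/"  # for this ecosystem, "/" covers the workflows in .github/workflows
    schedule:
      interval: "weekly"
```

Each resulting pull request should still be reviewed like any other dependency change. Keeping a version comment next to a pinned hash (for example `# v2`) makes those diffs easier to read.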

One compromise can be to move the use of the action into a shared reusable workflow or a composite action. Your workflows can then reference the shared component by an internal tag, while the shared component pins the third-party action to a hash and is updated as needed.
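A minimal sketch of that compromise, assuming a hypothetical central repository `my-org/shared-workflows` that contains a reusable workflow `deploy.yml`. The reusable workflow pins the third-party action to a full commit SHA, and application repositories call it by an internal tag (`v1` is illustrative). The two files are shown as one multi-document YAML block for brevity:

```yaml
# In my-org/shared-workflows: .github/workflows/deploy.yml (hypothetical)
on:
  workflow_call:
    secrets:
      deploy_secret:
        required: true

jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      # The third-party action is pinned to a commit SHA in one central place
      - uses: azure/some-deploy-action@247835779184621ab13782ac328988703583285a # v2
        with:
          deploy-secret: ${{ secrets.deploy_secret }}
---
# In each application repository: a job that calls the shared workflow by tag
jobs:
  deploy:
    uses: my-org/shared-workflows/.github/workflows/deploy.yml@v1
    secrets:
      deploy_secret: ${{ secrets.DEPLOY_SECRET }}
```

Updating the pinned hash then becomes one reviewed change in the shared repository instead of an edit in every workflow. Whoever can move the internal tag now controls what runs, so write access to that repository should be restricted accordingly.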

Mitigation 4: Use separate jobs or workflows for sensitive operations

```yaml
jobs:
  test: # ...
  package: # ...
  deploy:
    needs: [test, package]
    # ...
```

Unless you really need to do many things in the same job, there can be value in using separate GitHub jobs for separate work. That way, compromised code in one job may have less effect on the work in other jobs. In some cases, it can even make sense to split a workflow into multiple workflows.

In many cases, steps/actions do not need to contribute to the build output or later artifacts and can instead be run in a separate job that other jobs depend on (instead of being steps in the same job). There can also be performance gains by running certain jobs in parallel as well as better modularity by having clearer contracts between parts of the workflow.

If you are using GitHub hosted runners, each job in a workflow is run in a separate virtual machine. This gives some isolation between jobs (but is not a malware sandbox where you should run untrusted code).

Job separation can limit immediate secret exposure and, in general, reduce what malicious code is able to achieve. Otherwise, a malicious action could, for example, simply inject code into the local source or build directory and ultimately achieve malicious code execution in the production environment.
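A sketch of job separation, assuming a hypothetical third-party linting action `some-org/some-lint-action` and a hypothetical `./build.sh` script that writes to `dist/`. The third-party action runs in its own job with a read-only token and no secrets, and the build output reaches the deploy job only as an uploaded artifact:

```yaml
name: build-and-deploy

on:
  push:
    branches: [main]

permissions:
  contents: read  # default GITHUB_TOKEN permissions for every job

jobs:
  lint:
    # Third-party action isolated in its own job: no secrets referenced here
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: some-org/some-lint-action@v1  # hypothetical third-party action

  package:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: ./build.sh  # hypothetical build script writing to dist/
      - uses: actions/upload-artifact@v4
        with:
          name: app
          path: dist/

  deploy:
    needs: [lint, package]  # runs only after both jobs succeed
    runs-on: ubuntu-latest
    steps:
      - uses: actions/download-artifact@v4
        with:
          name: app
          path: dist/
      - uses: azure/some-deploy-action@v2
        with:
          deploy-secret: ${{ secrets.DEPLOY_SECRET }}
```

Only the `deploy` job references the secret, so the linting action never runs in a job where that secret has been provided.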

The level of isolation

The very useful GitHub Actions security hardening guide describes the level of isolation in different ways:

- When discussing third-party actions, it says: “The individual jobs in a workflow can interact with (and compromise) other jobs….”

- When comparing self-hosted and GitHub-hosted runners, it describes GitHub-hosted runners as “..ephemeral and clean isolated virtual machines, meaning there is no way to persistently compromise this environment, or otherwise gain access to more information than was placed in this environment during the bootstrap process.”

The security of a job therefore likely depends on what was placed in its environment during bootstrap.

Even if there can be certain exposures and escalation paths between jobs, most malicious actions and third-party dependencies targeting developers (malicious “postinstall” scripts and similar) will not perform advanced reconnaissance and escalation. Job separation therefore still effectively lowers the overall risk of exploitation.

Addition: As Praetorian mentions in their write-up on a CodeQL supply-chain vulnerability dubbed “CodeQLEAKED”, the branch-level build cache can be an attack vector for breaking job isolation. Mitigations will vary, but you should be aware of the risk. This is an area where GitHub could improve, with more secure defaults or more granular permissions.

Mitigation 5: Bind secrets to GitHub Environments (and environments to jobs)

Note: Environments are not the same as “Environment variables”. Avoid putting secrets in environment variables!

```yaml
jobs:
  deploy:
    runs-on: ubuntu-latest
    environment: production
    steps:
      - uses: azure/some-deploy-action@v2
        with:
          # This is an environment secret.
          # We've set up our environment production to only
          # activate for the main branch, and after review
          deploy-secret: ${{ secrets.DEPLOY_SECRET }}
```

By setting secrets as environment secrets instead of repository or organization secrets, you prevent them from being referenced, accidentally or otherwise, in jobs where the environment is not activated. Most importantly, though, you can set protection rules for the environment.

For example, you can specify that the environment can only be activated when the workflow is running on the main branch. That way, a workflow running for a pull request could not even access the secret.

Mitigation 6: Use temporary tokens for deploys via OpenID Connect (OIDC)

For many cloud providers, GitHub supports generating temporary tokens using OpenID Connect (OIDC) instead of using persistent secrets.

For example, you can assume roles in AWS using this syntax:

```yaml
permissions:
  id-token: write  # required to request an OIDC token
  contents: read

steps:
  - name: configure aws credentials
    uses: aws-actions/configure-aws-credentials@v4
    with:
      role-to-assume: arn:aws:iam::12345:role/MyRole
      aws-region: ${{ env.AWS_REGION }}
```

Here we have previously configured an OpenID Connect provider and set up a role called MyRole in our account 12345, with a trust policy like this:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::12345:oidc-provider/token.actions.githubusercontent.com"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "token.actions.githubusercontent.com:aud": "sts.amazonaws.com",
          "token.actions.githubusercontent.com:sub": "repo:Org/Repo:ref:refs/heads/Branch"
        }
      }
    }
  ]
}
```

This trust policy says that the role can only be assumed if the request comes from the specific repository and branch. The role then has whatever permissions are needed to deploy to AWS in this context.

This means that there’s no persistent GitHub secret to be stolen. Instead there will be short-lived access tokens in memory (for some definition of “short-lived”). This is generally a better situation.

You can set up more advanced rules for when roles can be assumed, one of which is our next mitigation…

Mitigation 7: Bind OIDC to environments instead of branches

The most common scenario is for OIDC trust to be bound to GitHub calling from a certain branch (such as main). That’s good, but we’re still in a similar scenario to what we wanted to fix with Mitigation 5. We don’t want all jobs on a branch to be able to assume the role.

We could instead set up the trust rules at the cloud provider to be bound to an environment. Then we can make it so only jobs that activate the environment can ask for an access token (and access cloud resources). Again, we set up activation requirements for the environment to limit when it can be used.

If we’ve put our third-party action in a separate job that does not activate the environment, the cloud provider would refuse to let it assume the role, even if it managed to make the OIDC API calls to get a token.
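A minimal sketch of that setup, reusing the `MyRole` example from Mitigation 6 and assuming an environment named `production` in the repository `Org/Repo`. When a job references an environment, the `sub` claim in its OIDC token takes the form `repo:Org/Repo:environment:production`, so the trust policy’s `sub` condition would change from the branch-based value to that string. The action `some-org/some-action` and the region are hypothetical placeholders:

```yaml
jobs:
  third-party:
    # No environment: any OIDC token from this job would carry a branch-based
    # sub claim, which the environment-bound trust policy rejects
    runs-on: ubuntu-latest
    permissions:
      contents: read  # id-token: write is also withheld, as defense in depth
    steps:
      - uses: some-org/some-action@v1  # hypothetical third-party action

  deploy:
    needs: third-party
    runs-on: ubuntu-latest
    environment: production  # sub claim becomes repo:Org/Repo:environment:production
    permissions:
      id-token: write
      contents: read
    steps:
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::12345:role/MyRole
          aws-region: eu-north-1  # example region
```

Combined with environment protection rules, only a job that passes those rules can obtain credentials for the role.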

Mitigation 8: Scan workflows with static analysis tools (SAST) to find issues such as unnecessary secret activation

If you have the luxury of having CodeQL available, make sure you’ve enabled the recently introduced comprehensive support for scanning workflows. It’s an easy way to improve your GitHub Actions security by scanning for known dangerous workflow patterns.

As a relevant example, the “Excessive Secrets Exposure” rule can find cases where GitHub cannot determine which secrets a workflow needs. If that happens, all secrets that the workflow could possibly reference are loaded into memory.

A typical case where this happens is a matrix workflow that references secrets dynamically using the secrets[…] syntax. In that case, GitHub cannot determine which secrets will be needed, so all of them are made available.
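A minimal sketch of the pattern and one way around it. It assumes hypothetical repository secrets `DEPLOY_TOKEN_STAGING` and `DEPLOY_TOKEN_PRODUCTION` for the first job, and GitHub Environments named `staging` and `production` that each define a secret called `DEPLOY_TOKEN` for the second; the deploy action is the made-up one used earlier in this post:

```yaml
jobs:
  # Flagged pattern: the secret name is computed at runtime, so GitHub cannot
  # tell which secret is needed and makes every accessible secret available
  deploy-dynamic:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        target: [STAGING, PRODUCTION]
    steps:
      - uses: azure/some-deploy-action@v2
        with:
          deploy-secret: ${{ secrets[format('DEPLOY_TOKEN_{0}', matrix.target)] }}

  # Alternative: one environment per matrix entry and a static secret reference
  deploy-static:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        target: [staging, production]
    environment: ${{ matrix.target }}
    steps:
      - uses: azure/some-deploy-action@v2
        with:
          deploy-secret: ${{ secrets.DEPLOY_TOKEN }}
```

In the second job, the secret name is known statically, and each environment can carry its own protection rules.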

GitHub Actions security beyond actions: Similar things could happen to GitHub Apps

Workflows are not the only place where you can give third parties privileged access to your repositories and secrets. When auditing your environment and security posture, make sure to review your GitHub Apps (and OAuth apps): check which apps you have enabled and what level of access they have. Apps can often be scoped to a subset of repositories, but it’s not possible to give an app less access than what it requests at installation.

Final words

Hopefully in the future immutable actions (Public preview) and/or lock files will reduce some of these risks.

The current use of tags, and even SHA hashes, is not without problems. Until we have better options, we must be extra cautious about adding third-party actions to our workflows.

Sadly, even when we have immutable actions in the future, we will still need to be cautious when adding third-party code.

CI/CD processes are business-critical and provide paths to production systems and customer data.

That makes GitHub Actions security crucial for organizations that use workflows.
